
Latest revision as of 12:32, 18 November 2025

Measurement tools for collecting learning outcomes: long-term effect

What is this about?

This module gives an overview of measurement tools and evaluates how the identified measurement methods can be used to capture long-term effects.

We have grouped the tools according to Kirkpatrick’s (1959) framework of training effectiveness. The framework has been used for training evaluation in REI contexts (Steele et al., 2016; Stoesz & Yudintseva, 2018) as well as in higher education (HE) contexts (Praslova, 2010). It comprises the following four levels, and different kinds of tools may provide information about whether each level has been achieved:

- reactions (level 1; participants’ self-assessment) – different kinds of instruments may be used to collect learners’ affective and utility judgements;

- learning process (level 2; knowledge, content) – content tests, performance tasks, other coursework that is graded or evaluated, pre- and post-tests;

- behaviour and practices (level 3; acting in the research community) – end-of-programme/course integration papers or projects, learning diaries/journals kept over a longer period, documentation of integrative work, tasks completed as part of other courses;

- results (level 4; e.g. institutional outcomes) – results can be monitored via alumni and employer surveys, media coverage, awards or recognition. In addition, nationwide surveys may indicate the ‘health’ of RE/RI.

National survey

National REI surveys (barometers) may not address training directly, but they can serve as macro-level, long-term reflections of the state of REI in a given context. As such, they may also reflect whether training efforts, in a broad sense, have been effective (Kirkpatrick’s level 4). At this macro level the effect cannot be tied to any specific training. Even so, the surveys may show the extent of challenges that could have been, or could still be, alleviated through training, and they can point to training needs. For instance, such surveys usually collect information about participation in training and ask how confident researchers feel in dealing with ethical issues during their research. These data can then be analysed statistically.
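The statistical analysis mentioned above can start as simply as comparing self-reported confidence between respondents who did and did not report taking part in REI training. The following Python sketch assumes a hypothetical record layout: a `SurveyResponse` with a `trained` flag and a numeric `confidence` score. Real barometer items and scales will differ. A gap between the group means is only a macro-level indication. It does not show that any particular training caused the gap.

```python
from dataclasses import dataclass
from statistics import fmean


@dataclass
class SurveyResponse:
    # Hypothetical survey record, not the format of any real barometer.
    trained: bool    # respondent reported taking part in REI training
    confidence: int  # self-rated confidence in handling ethical issues, e.g. 1-5


def confidence_by_training(responses: list[SurveyResponse]) -> dict[str, float]:
    """Mean confidence of trained vs. untrained respondents.

    A group with no respondents is left out instead of being reported as 0.
    """
    groups: dict[str, list[int]] = {"trained": [], "untrained": []}
    for r in responses:
        groups["trained" if r.trained else "untrained"].append(r.confidence)
    return {name: fmean(scores) for name, scores in groups.items() if scores}


if __name__ == "__main__":
    sample = [SurveyResponse(True, 4), SurveyResponse(True, 5), SurveyResponse(False, 3)]
    print(confidence_by_training(sample))  # prints {'trained': 4.5, 'untrained': 3.0}
```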

In addition, national as well as institutional surveys may provide an opportunity to collect cases of questionable practices, which future training could then address. To collect cases that researchers consider confusing or problematic, open-answer questions could be added to the surveys.

The health of the entire research community can also be evaluated by monitoring the leadership aspect in the surveys. An REI leadership framework (Tammeleht et al., 2022, submitted) can be used to analyse this aspect. Such a meta-analysis provides information about the wider impact of research practices in research institutions. It also helps institutional leaders support everyone in their organisation in adopting ethical research practices.

This tool is suitable for use in training with ECRs and active researchers.

Retention check

One option for measuring the effectiveness of a learning process and its outcomes is to take measurements at several points during the learning process and after the intervention. The effectiveness of REI training can also be inferred from the quality of ethics sections in published articles and dissertations. The goal is to measure whether the ethical competencies acquired during training (and other activities) have been retained and are in use (Kirkpatrick’s level 3).

This kind of data collection requires some planning from the facilitator. Text collection should be planned in advance; a Moodle course may be convenient, because all submissions by the same person accumulate there. Group size should be considered, since following many submissions may be too time-consuming in large groups. Colleagues may also be able to collaborate in collecting texts (e.g. one text collected during the course and another later during another course). In the case of articles published or dissertations defended by researchers at the same institution, the quality and content of the ethics sections may shed light on the practices prevalent in that community.

Texts collected at various points can be analysed using the SOLO taxonomy, reflection levels (if relevant) or content criteria (ethical principles, ethical analysis, ethical approaches). Ethics sections can be analysed using the framework for analysing ethics content (Cronqvist, 2024).
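Here is a minimal sketch of a retention check. It assumes that a human coder has already assigned each collected text one SOLO level. The Python snippet below orders the five SOLO levels (Biggs & Collis, 1982) and compares each participant's last text with their first. The status labels and the first-versus-last rule are illustrative assumptions. Reflection levels or content criteria could be tracked in the same way.

```python
from enum import IntEnum


class Solo(IntEnum):
    # SOLO taxonomy levels (Biggs & Collis, 1982), ordered from lowest to highest.
    PRESTRUCTURAL = 1
    UNISTRUCTURAL = 2
    MULTISTRUCTURAL = 3
    RELATIONAL = 4
    EXTENDED_ABSTRACT = 5


def retention_status(levels: list[Solo]) -> str:
    """Compare one participant's last coded text with their first.

    `levels` holds the coder-assigned SOLO level of each text, in the
    order the texts were collected (e.g. during the course, then later).
    The three labels are illustrative, not part of the SOLO taxonomy.
    """
    if len(levels) < 2:
        raise ValueError("need texts from at least two time points")
    first, last = levels[0], levels[-1]
    if last > first:
        return "improved"
    if last == first:
        return "retained"
    return "declined"


def summarise(cohort: dict[str, list[Solo]]) -> dict[str, int]:
    """Count participants per retention status across a cohort."""
    counts = {"improved": 0, "retained": 0, "declined": 0}
    for levels in cohort.values():
        counts[retention_status(levels)] += 1
    return counts
```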

This tool is suitable for use in training for various target groups, but it may be challenging to reach the participants of earlier training sessions.

Using vignettes to measure general ethical sensitivity in the research community

Vignettes can be integrated into various contexts, such as team training sessions and institutional or national surveys. Vignettes are commonly used in training, but collecting comments on them is less common in non-training contexts (e.g. as part of national REI surveys, team meetings or conferences). Still, the comments collected on vignettes may be a valuable source of information about respondents’ attitudes, beliefs, knowledge and ethical sensitivity (Kirkpatrick’s level 3).

A vignette describes a situation with one or several ethical aspects, which may or may not have a straightforward solution. There are several ways to gauge ethical sensitivity with vignettes. For example, respondents can use a Likert scale to indicate how ethical the situation seems to them. An open-answer option can also be added, and research indicates (Parder et al., 2024; Tammeleht et al., forthcoming) that open responses reveal more about ethical sensitivity than quantitative data do.
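For the Likert-based measure, one simple summary is the share of respondents per vignette whose rating falls in the range treated as unethical. In the Python sketch below, the `unethical` parameter sets which scale points count as unethical. This is an explicit assumption, because the anchoring of the scale is not specified here. The function names are hypothetical.

```python
def unethical_share(
    ratings: list[int], unethical: set[int], lo: int = 1, hi: int = 6
) -> float:
    """Share of valid ratings that fall in the `unethical` set.

    Ratings outside lo..hi (e.g. missing answers coded as 0) are ignored.
    Which points count as unethical is the analyst's assumption, e.g.
    {1, 2, 3} if 1 means "very unethical" on a 1-6 scale.
    """
    valid = [r for r in ratings if lo <= r <= hi]
    if not valid:
        raise ValueError("no valid ratings")
    return sum(1 for r in valid if r in unethical) / len(valid)


def shares_by_vignette(
    data: dict[str, list[int]], unethical: set[int]
) -> dict[str, float]:
    """Apply unethical_share to the ratings of every vignette."""
    return {name: unethical_share(ratings, unethical) for name, ratings in data.items()}
```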

Implementing vignettes in surveys or team meetings/conferences requires some preparation from the facilitators, but collecting responses and comments is quite simple. Analysing the results may take some time, especially if open answers are scrutinised. We recommend using the EASM (Ethical Awareness and Sensitivity Meter) to measure the level of sensitivity in the open answers. Other options are to apply content criteria (ethical principles, ethical analysis, ethical approaches) or to check whether respondents recognise the topics present in the vignette (similar to the domain-specific measure).

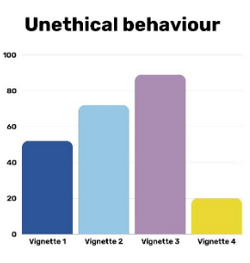

For example, the Estonian national REI survey included four vignettes and asked respondents to rate the ethicality of each situation on a Likert scale (1–6). The results of the statistical analysis (Figure 1) show that the vignettes were rated differently: some topics were considered more unethical than others.

Figure 1. Unethical behaviour identified on a Likert scale (all unethical indications) (from Parder et al., 2024).

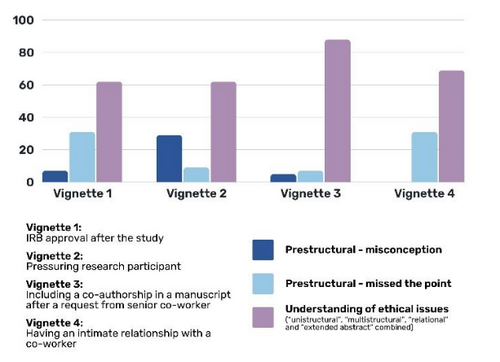

The respondents then had the chance to add a comment. This was optional, but about half of the respondents used the opportunity, which may indicate some ethical sensitivity. The open comments were analysed using the EASM, and the picture looked somewhat different (Figure 2). Based on the Likert scale results, vignette 4 was not considered very unethical (or not connected to ethics). The open answers, however, revealed that 70% of respondents actually recognised the situation as ethical in nature and showed an understanding of the topic. It also became clear that 30% of respondents had completely missed the topic, meaning they had not understood the situation from an ethical perspective.

Figure 2. Analysis of open comments to the vignettes (from Parder et al., 2024).
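The tallying behind an analysis like this can be sketched as follows, assuming each respondent's comment has already been coded by hand. The labels used here ("recognised", "missed") are hypothetical stand-ins and not the EASM categories. The sketch reports two figures: the comment rate, and each label's share among commenters. Any reported percentage should state which denominator it uses (all respondents or only commenters).

```python
from collections import Counter
from typing import Optional


def comment_summary(codes: list[Optional[str]]) -> tuple[float, dict[str, float]]:
    """Summarise coded open comments for one vignette.

    `codes` has one entry per respondent: None if they left no comment,
    otherwise the label a coder assigned (hypothetical labels, e.g.
    "recognised" / "missed"). Returns the share of respondents who
    commented and the share of each label among those who commented.
    """
    if not codes:
        raise ValueError("no respondents")
    commented = [c for c in codes if c is not None]
    rate = len(commented) / len(codes)
    if not commented:
        return rate, {}
    counts = Counter(commented)
    return rate, {label: n / len(commented) for label, n in counts.items()}
```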

Overall, the identified misconceptions and failures to notice ethical issues (both at the prestructural level of the SOLO taxonomy) may indicate that training is needed to clarify these topics.
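The reasoning above can be turned into a simple screening step. Answers coded as a misconception or as having missed the issue are treated as prestructural, and vignette topics where such answers exceed a chosen share are flagged. The Python sketch below uses hypothetical code labels and a 25% threshold purely for illustration. Neither comes from the studies cited here, and a flag is a prompt for a closer look, not a finding.

```python
# Hypothetical coding labels; both are treated as prestructural (SOLO).
PRESTRUCTURAL_CODES = frozenset({"misconception", "missed"})


def prestructural_share(codes: list[str]) -> float:
    """Share of coded answers that fall at the prestructural level."""
    if not codes:
        raise ValueError("no coded answers")
    return sum(1 for c in codes if c in PRESTRUCTURAL_CODES) / len(codes)


def topics_needing_training(
    coded: dict[str, list[str]], threshold: float = 0.25
) -> list[str]:
    """Topics whose prestructural share exceeds `threshold`, highest first.

    The default threshold is an illustrative assumption, not a published cut-off.
    """
    shares = {topic: prestructural_share(codes) for topic, codes in coded.items()}
    flagged = [topic for topic, share in shares.items() if share > threshold]
    return sorted(flagged, key=lambda topic: shares[topic], reverse=True)
```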

This tool is suitable for use in training for ECRs, supervisors/mentors and expert researchers.

Conclusion and recommendations

Table 4 summarises the tools for measuring REI training effectiveness in the HE context.

Limitations

One limitation of the measurement tools and analysis instruments is that few feasible large-scale measurements are available. In addition, the entire ethics infrastructure may affect how people behave in the research community, so effectiveness may be influenced by the wider community beyond training. Moreover, effectiveness can be interpreted in various ways in different contexts. Our focus has been on learning outcomes, which we have operationalised through an established framework of training effectiveness levels (Kirkpatrick, 1959; Praslova, 2010).

References

Bell, A., Kelton, J., McDonagh, N., Mladenovic, R., & Morrison, K. (2011). A critical evaluation of the usefulness of a coding scheme to categorise levels of reflective thinking. Assessment & Evaluation in Higher Education, 36(7), 797-815.

Biggs, J. and Collis, K. (1982). Evaluating the quality of learning: The SOLO taxonomy. New York: Academic Press.

Biggs, J. and Tang, C. (2007). Teaching for Quality Learning at University (3rd ed.). Buckingham: SRHE and Open University Press.

Chejara, P., Prieto, L. P., Ruiz-Calleja, A., Rodríguez-Triana, M. J., Shankar, S. K. & Kasepalu, R. (2021). EFAR-MMLA: An Evaluation Framework to Assess and Report Generalizability of Machine Learning Models in MMLA. Sensors, 21(8), 2863.

Cronqvist, M. (2024). Research ethics in Swedish dissertations in educational sciences – a matter of confusion. Nordic Conference of PhD Supervision, 2024. Karlstad, Sweden.

DeLozier, S. J., & Rhodes, M. G. (2017). Flipped classrooms: A review of key ideas and recommendations for practice. Educational Psychology Review, 29(1), 141-151.

Gibbs, P., Costley, C., Armsby, P. and Trakakis, A. (2007). Developing the ethics of worker-researchers through phronesis. Teaching in Higher Education, 12(3), 365–375. https://doi.org/10.1080/13562510701278716

Hannula, M. S., Haataja, E., Löfström, E., Garcia Moreno-Esteva, E., Salminen-Saari, J., & Laine, A. (2022). Advancing video research methodology to capture the processes of social interaction and multimodality. ZDM Mathematics Education 54, 433–443. https://doi.org/10.1007/s11858-021-01323-5

Jordan, J. (2007). Taking the first step towards a moral action: A review of moral sensitivity across domains. Journal of Genetic Psychology, 168, 323–359.

Kember, D., Jones, A., Loke, A., McKay, J., Sinclair, K., Tse, H., Webb, C., Wong, F., Wong, M., and Yeung, E. (1999). Determining the level of reflective thinking from students’ written journals using a coding scheme based on the work of Mezirow. International Journal of Lifelong Education, 18(1), 18–30. https://doi.org/10.1080/026013799293928

Kember, D., Leung, D. Y. P., Jones, A., Loke, A. Y., McKay, J., Sinclair, K., Tse, H., Webb, C., Wong, F. K. Y., Wong, M., and Yeung, E. (2000). Development of a questionnaire to measure the level of reflective thinking. Assessment & Evaluation in Higher Education, 25(4), 381–395. https://doi.org/10.1080/713611442.

Kember, D., McKay, J., Sinclair, K., & Wong, F. K. Y. (2008). A four-category scheme for coding and assessing the level of reflection. Assessment & Evaluation in Higher Education, 33(4), 369-379.

Kirkpatrick, D. L. (1959). Techniques for evaluating training programs. Journal of the American Society of Training Directors, 13, 21–26.

Kitchener, K. S. (1985). Ethical principles and ethical decisions in student affairs. In Applied ethics in student services: New directions for student services, no. 30 (pp. 17–29). San Francisco: Jossey-Bass.

Löfström, E. (2012). Students’ Ethical Awareness and Conceptions of Research Ethics. Ethics & Behavior, 22(5), 349–361. https://doi.org/10.1080/10508422.2012.679136

Löfström, E. and Tammeleht, A. (2023). A pedagogy for teaching research ethics and integrity in the social sciences: case-based and collaborative learning. In Academic Integrity in the Social Sciences (pp. 127–145).

Mezirow, J. (1991). Transformative dimensions in adult learning. San Francisco: Jossey-Bass.

Mustajoki, H. and Mustajoki, A. (2017). A new approach to research ethics: Using guided dialogue to strengthen research communities. Taylor & Francis. https://doi.org/10.4324/9781315545318

Parder, M.-L., Tammeleht, A., Rajando, K. and Simm, K. (2024). Using vignettes for assessing ethical sensitivity in the national research ethics and integrity study. Poster presentation at the World Conference of Research Integrity, 2024. Athens, Greece.

Praslova, L. (2010). Adaptation of Kirkpatrick’s four level model of training criteria to assessment of learning outcomes and program evaluation in Higher Education. Educational Assessment, Evaluation and Accountability, 22, 215–225. https://doi.org/10.1007/s11092-010-9098-7

Salminen, A., & Pitkänen, L. (2020). Finnish research integrity barometer 2018. Finnish National Board on Research Integrity TENK publications, 2-2020.

Steele, L. M., Mulhearn, T. J., Medeiros, K. E., Watts, L. L., Connelly, S., and Mumford, M. D. (2016). How do we know what works? A review and critique of current practices in ethics training evaluation. Accountability in Research, 23(6), 319–350. https://doi.org/10.1080/08989621.2016.1186547

Stoesz, B. M. and Yudintseva, A. (2018). Effectiveness of tutorials for promoting educational integrity: a synthesis paper. International Journal for Educational Integrity, 14(6). https://doi.org/10.1007/s40979-018-0030-0

Stolper, M. & Inguaggiato, G. (n.d.) Debate and Dialogue. https://embassy.science/wiki/Instruction:Ac206152-effd-475b-b8cd-7e5861cb65aa

Tammeleht, A., Rodríguez-Triana, M. J., Koort, K., & Löfström, E. (2019). Collaborative case-based learning process in research ethics. International Journal of Educational Integrity, 15(1), 6.

Tammeleht, A., Rodríguez-Triana, M. J., Koort, K., & Löfström, E. (2020). Scaffolding collaborative case-based learning in research ethics. Journal of Academic Ethics, 19, 229–252.

Tammeleht, A., Löfström, E. & Rodríguez-Triana, M. J. (2022). Facilitating development of research ethics leadership competencies. International Journal of Educational Integrity. https://doi.org/10.1007/s40979-022-00102-3

Tammeleht, A. (2022). Facilitating the development of research ethics and integrity competencies through scaffolding and collaborative case-based problem-solving. Helsinki: Unigrafia OY, Helsinki Studies in Education.

Tammeleht, A., Antoniou, J., Bernabe, R. de La C., Chapin, C., van den Hooff, S., Mbanya, V. N., Parder, M.-L., Sairio, A., Videnoja, K., & Löfström, E. (submitted). Manifestations of research ethics and integrity leadership in national surveys – Cases of Estonia, Finland, Norway, France and the Netherlands.

Tammeleht, A., Parder, M.-L., Rajando, K., and Simm, K. (forthcoming). Using vignettes for assessing ethical sensitivity in the national research ethics and integrity survey – an example from Estonia.

Thorpe, K. (2004). Reflective learning journals: From concept to practice. Reflective Practice, 5(3), 327–343. https://doi.org/10.1080/1462394042000270655

Tucker, B. (2012). The flipped classroom. Education Next, 12(1), 82-83.

APPENDIX – portfolio template example

Research ethics/integrity workshop e-portfolio

Group members: ………………………………………………………………………

Assignment 1

There are specific ethical issues associated with each phase of research. Please add the case that your team chose for your phase into the textbox below and answer the following questions:

- Identify any ethical issues or potential problems that you noticed. You may colour, underline, or otherwise mark the appropriate keywords or phrases.

- Discuss [amongst yourselves] and briefly comment why these issues or problems are important. In addition, think of potential risks or detriments that may ensue and how to mitigate them.

- Also reflect on how these problems may be interrelated or interdependent or how one can originate from another.

Assignment 2

Now that you have become acquainted with the case and thought about the ethical issues that may arise (Assignment 1), please review the complementary questions in Assignment 2. Copy the questions into the text box below (“Questions”) and write your answers into the next text box (“Answers”). Try to answer the questions first based on your current knowledge of the subject. Afterwards, supplement or correct your answers using the support material in Assignment 3.

| Questions |
|---|
| Copy the questions into this box |

| Answers |
|---|
| Write your answers into this box using two different colours for the initial and supplementary answers |

Assignment 3

First, review and discuss all of the questions among yourselves and form an understanding of the scope of your current knowledge and where it falls short. Then open the support material on the next page of the website and supplement the answers in the previous text box (Assignment 2). Please use a different colour for this (e.g. blue).

Assignment 4

At this point you have addressed potential ethical issues, focused your thoughts on the details of these problems and supplemented your knowledge of the subject. The last step is to think back on the initial case that you reviewed in Assignment 1. Form an overview of aspects that you may have initially missed, think of the wider implications of these ethical issues and reflect on how they may affect your own work now or in the future.

To guide your discussion, you may use the guideline questions below:

- Which ethical issues or themes do you notice in your chosen case that you missed initially?

- Could any of these aspects be relevant in other situations? How?

- What could be the ramifications of disregarding any of these aspects?

- Are there any aspects that you have personal experience with? Is there any change in how you see them now?

- Which ethical issues could you encounter in the future? How would you handle them?

Discuss and give examples.

| Discussion |
|---|
|  |

Self-reflection

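Finally, individually fill out the web-based [self-reflection](https://docs.google.com/forms/d/e/1FAIpQLScL8RNWKLWBJriOmYp0Ix5Bob1KVv04W_4lagFaRKRpXxLKpA/viewform?usp=sf_link) and copy your results below: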

| Participant 1: |
|---|
| Participant 2: |
| Participant 3: |
| Participant 4: |
| Participant 5: |